The Need for Cyberinfrastructure at the University of Washington

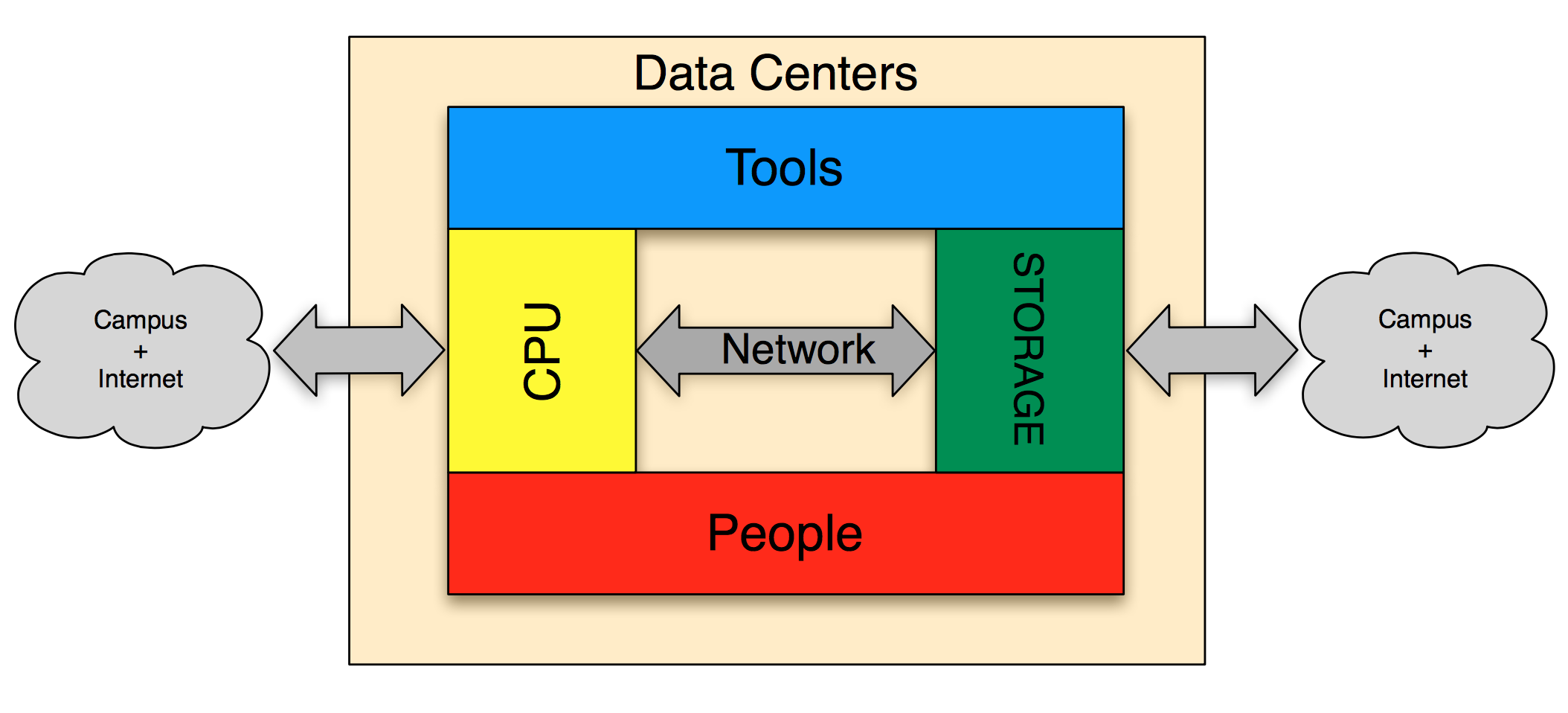

Cyberinfrastructure is the coordinated aggregate of software, hardware and other technologies, as well as human expertise, required to support current and future discoveries in science and engineering. The challenge of Cyberinfrastructure is to integrate relevant and often disparate resources to provide a useful, usable, and enabling framework for research and discovery characterized by broad access and "end-to-end" coordination.

-- Francine Berman (February 18, 2005). "SBE/CISE Workshop on Cyberinfrastructure for the Social Sciences". San Diego Supercomputer Center.

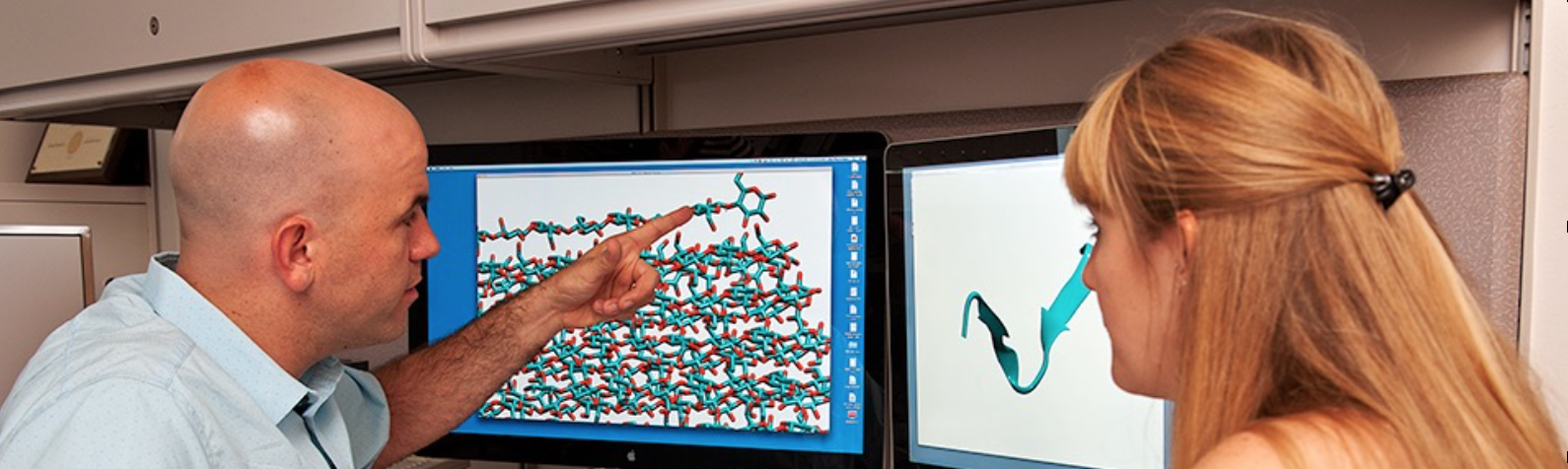

An extensive "conversation" with UW research leaders was conducted to determine the UW's then-current and anticipated future needs for HPC and, more generally, cyberinfrastructure. The process involved more than 50 UW-IT staff and 120 researchers, the researchers being selected on the basis of their funding profile and international stature.

The conversation was designed to identify future research directions and the associated technology, resource and service needs.

The survey identified essential needs for:

IT and data management expertise

Data management infrastructure

Computing power

Communication and collaboration tools

Data analysis and collection assistance

The survey also identified several compelling reasons for the UW to invest substantially in HPC and cyberinfrastructure.

Logistical Reasons:

Faculty Recruitment and Retention. Because HPC and cyberinfrastructure are now an integral part of cutting-edge research programs, both attracting new faculty members and retaining those already at the UW require cyberinfrastructure that can support such programs.

Data Center Space Crisis. The UW was paying to use data centers to store large amounts of data. The anticipated growth in data volume made this arrangement untenable, so alternative plans had to be made.

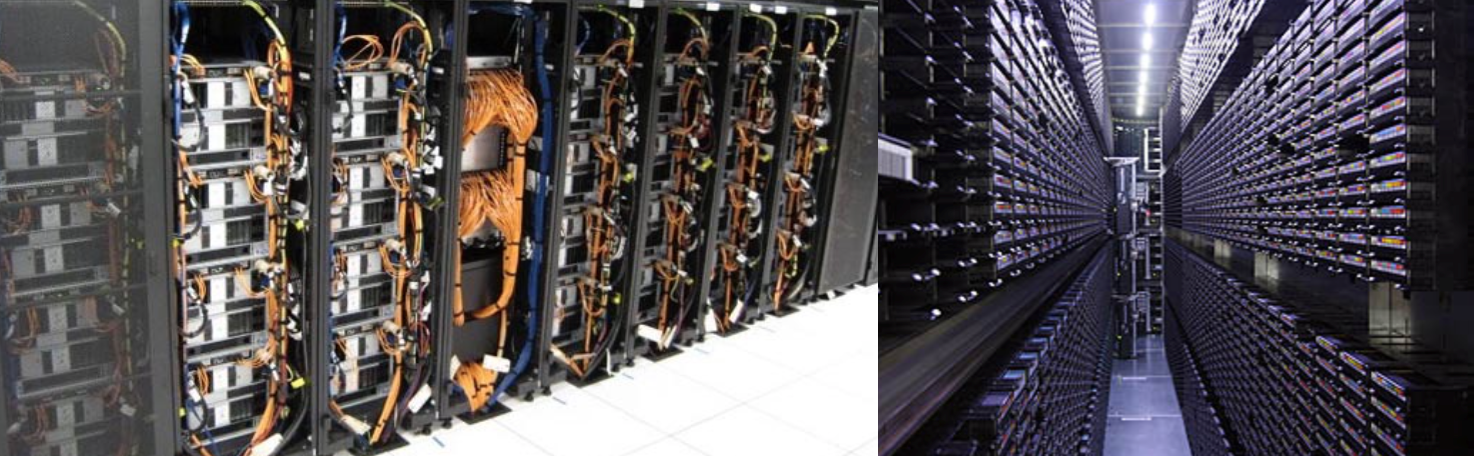

Cyberinfrastructure at Scale. Researchers who required computing had been maintaining their own, or department-wide, computing infrastructure within their own buildings. As a result, the UW had a large number of duplicated facilities (approximately) housing computers, storage and networking equipment, with varying degrees of energy efficiency and each requiring a small workforce to maintain and operate.

Consolidation of these smaller facilities and infrastructures would result in significant energy savings and a more efficient workforce.

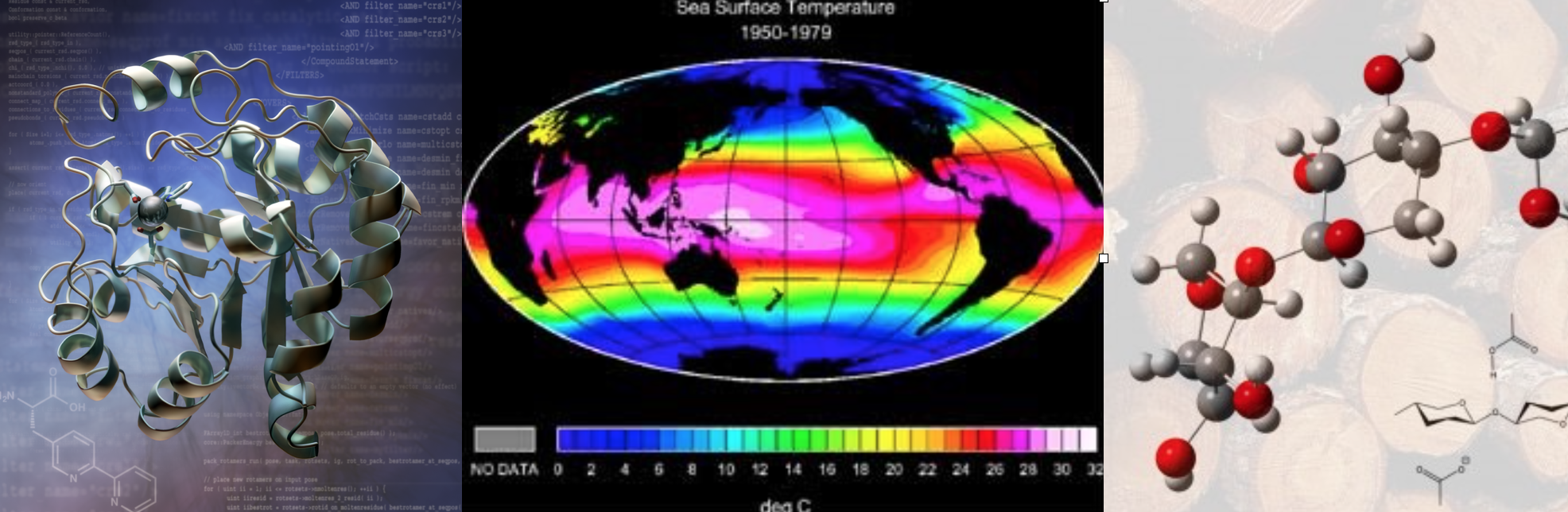

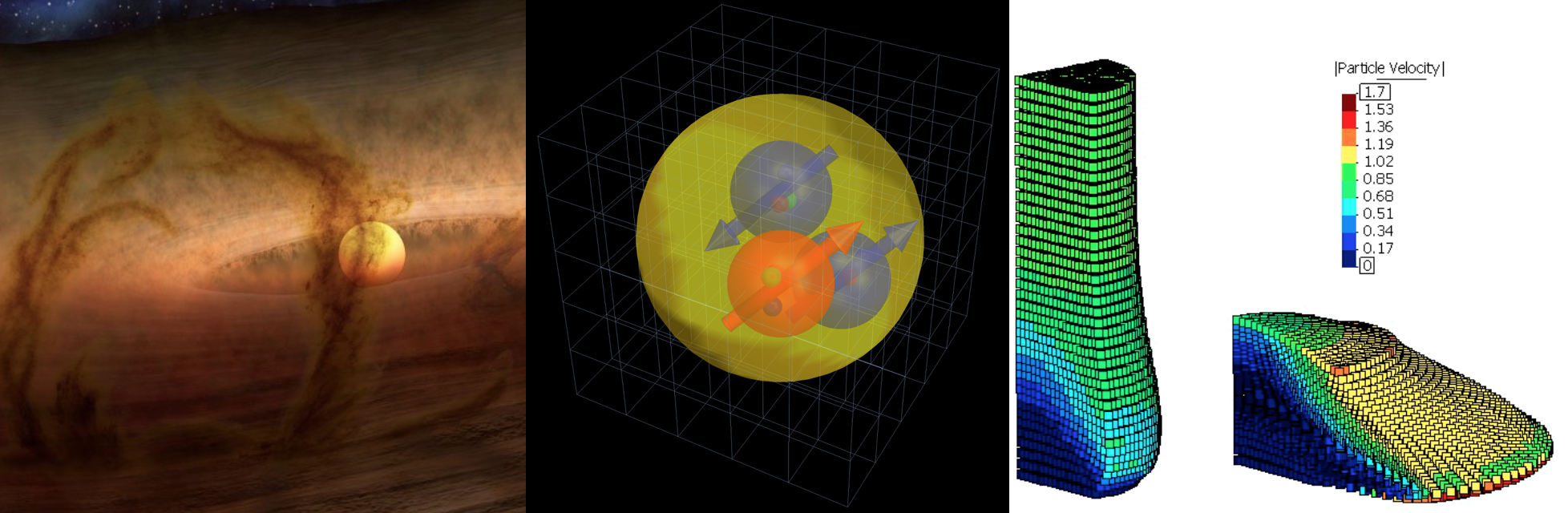

Science Reasons:

The Speed of Science.

When researchers have new ideas, whether new avenues to pursue, new algorithms or new workflows, it is important that they can test them quickly and determine how they scale to large numbers of compute cores and large data volumes.

Typically, acquiring HPC resources at national supercomputing centers requires that the proposed code, algorithms and workflows already run at scale, and allocation of resources can take months after a proposal is submitted.

Local on-demand HPC resources eliminate this barrier. They provide an environment in which to prepare codes and science programs to run at scale on leadership-class computing facilities, enabling UW researchers to be more competitive at the national level.

They also give students a resource for learning to work with advanced computational tools.

Preparation for Petascale and now Exascale.

Preparing to optimally use the world's most capable supercomputers to address the most important scientific challenges is a complex and coordinated effort requiring collaboration between researchers with a range of expertise, from domain scientists to applied mathematicians to statisticians to computer scientists.

Access to scaled-down computational hardware that has the same structure, and responds the same way, as the leadership-class computational platforms is essential if UW researchers are to prepare to lead research programs requiring such resources.

Big Data Pipelines.

The continued proliferation of data generated by experiments, simulations and society requires that the UW provide the capability to move large amounts of data quickly.

Because an enormous number of data-collecting experiments and simulations are performed daily at the UW itself, significant data-handling and processing capabilities need to be located nearby, with a fast connection to the outside world.

In addition, UW researchers are members of large international collaborations that collect data at facilities around the world, and portions of that data need to be moved to the UW for subsequent analysis.

Data Privacy for "Cloudy" Workloads.

The need for data privacy precludes the use of private-sector cloud computing platforms for sensitive research projects.

Around 2010, the UW as an institution made significant investments to address the HPC and cyberinfrastructure needs of its researchers. A rough timeline of the process follows:

2000: A sharp increase in computational research, driven by the appearance of Linux, cheap CPUs, Beowulf clusters, etc.

2005: The UW data centers are overwhelmed by the volume of data. Discussions about "data science" begin.

2007: Vice President for Research Mary Lidstrom convenes a forum to discuss solutions to the data center problem.

2007-2008: Conversations with UW research leaders (discussed above).

2008: Physics and Astronomy deploy the Athena cluster, with 1024 CPUs sustaining approximately 1 Tflop/s.

2008: The Vice President for Research hires the UW's first eScientist, Chance Reschke.

2008: The eScience Institute rollout event (blog; Savage's presentation). The institute was designed to be the conduit supporting the HPC and data needs of UW researchers.

2010: Hyak, Lolo and a primitive Science DMZ are launched, supported by funding from the UW and NSF.

2014: High-speed research network and Science DMZ.