INSTITUTE FOR NUCLEAR THEORY

News


Extreme Computing and its Implications for the Nuclear Physics/Applied Mathematics/Computer Science Interface

(INT Program June 6 - July 8, 2011)

Reported by W. C. Haxton; Organizing Committee: Joe Carlson, George Fuller, Tom Luu, Juan Meza, Tony Mezzacappa, John Negele, Esmond Ng, Steve Pieper, Martin Savage, James Vary, Pavlos Vranas

Date posted December 8, 2011

Most of the organizing committee had taken part in the DOE Nuclear Physics Grand Challenge meeting that was held in Washington D.C. in January, 2009. This INT program was proposed shortly thereafter as a means of maintaining the Grand Challenge momentum and continuing discussions. In particular, we hoped the INT meeting would allow us to continue the dialog with the applied mathematics and computer science communities, whose help is increasingly important to the efficient use of new and more complex computing architectures. The theoretical nuclear physics community is not large, nor does it include many discipline scientists who are competent to address complex algorithm and programming problems. Just as our experimental colleagues were challenged three decades ago to make the transition from small-scale nuclear experiments to flagship accelerator facilities demanding large collaborations and a great deal of technical support, nuclear theory is now facing the challenge of evolving its numerical physics program rapidly enough to keep pace with hardware developments.

The program was organized with the goal of keeping the nuclear physics community an active participant in planning for exascale computing. The field has several important applications that are computationally limited, including lattice QCD; various ab initio nuclear structure methods (diagonalization of large sparse shell-model matrices, fermion Monte Carlo, variational methods, and density functional theory); and the simulation of explosive astrophysical environments such as supernovae. Were a thousandfold increase in computing power to become available, nuclear physics would be one of the fields with the most to gain.

Each week of the program was organized by a subset of the organizers. Apart from the workshop week, we followed the standard INT format: one or two morning seminars, with the afternoons left for individual research and informal discussion. The workshop week was quite intense, with broad-ranging talks touching on almost all aspects of numerical nuclear physics, complemented by a series of presentations from the applied math and computer science communities describing the tools they are developing for extreme-scale computing.

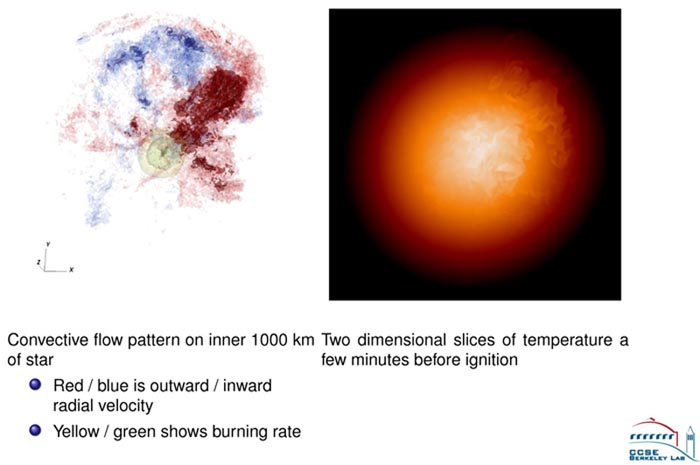

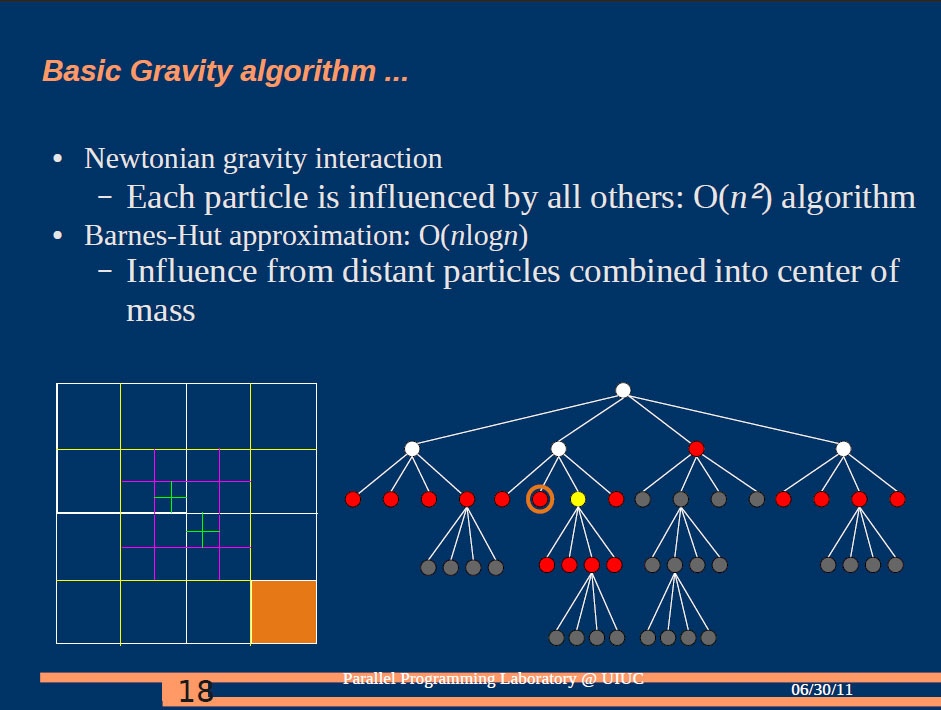

The workshop week was the program highlight, both because it attracted an interdisciplinary group and because of the wide-ranging discussions. The format generally paired nuclear theorists (or theorists from closely related fields) describing their computational work with related presentations from an applied math/computer science viewpoint. The first day focused on multigrid methods and adaptive mesh refinement (AMR), with applications from lattice QCD and astrophysics. Brower described factor-of-20 speedups obtained by implementing multigrid methods in lattice QCD calculations. John Bell described AMR methods in explosive astrophysics and the challenges such techniques will face on new machines with reduced memory per core, which place a premium on intelligent memory-access strategies. Rob Falgout described LLNL efforts to develop general-purpose packages for scalable multigrid applications. On the second day of the workshop, Chao Yang described LBNL efforts to develop improved parallel methods for solving large-scale eigenvalue problems for sparse matrices, while Pieter Maris discussed the implementation of such methods in the no-core shell model. Wednesday's talks focused on tools that could help users with performance, optimization, and data management. Alexandre Chorin described a recently developed algorithm of possible relevance to nuclear physics, chainless Monte Carlo. A series of talks described efforts to exploit GPUs for nuclear physics, including applications to lattice QCD and the astrophysical N-body problem.
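Sparse eigenvalue problems of the kind discussed on the second day (finding the lowest eigenstates of a very large, sparse shell-model Hamiltonian) are commonly attacked with Krylov-subspace methods. As a rough illustration only, the following minimal sketch implements the Lanczos iteration with full reorthogonalization in Python/NumPy. It is not the LBNL solver or the no-core shell model code presented at the workshop; the function names and the test matrix are hypothetical. The key property is that the matrix enters only through a matrix-vector product (`matvec`), so it never has to be stored densely.

```python
import numpy as np

def lanczos_lowest(matvec, n, num_iter=50, seed=0):
    """Estimate the lowest eigenvalue of a symmetric n x n operator.

    Hypothetical helper for illustration. `matvec(x)` must return A @ x as a
    new array; A itself is never formed. Full reorthogonalization is used for
    robustness; large-scale codes use more economical variants.
    """
    rng = np.random.default_rng(seed)
    q = rng.standard_normal(n)
    q /= np.linalg.norm(q)
    basis = [q]                  # Lanczos vectors q_0, q_1, ...
    alphas, betas = [], []       # diagonal / off-diagonal of tridiagonal T
    beta, q_prev = 0.0, np.zeros(n)
    for _ in range(min(num_iter, n)):
        # Three-term recurrence: w = A q_j - beta_{j-1} q_{j-1} - alpha_j q_j
        w = matvec(q) - beta * q_prev
        alpha = q @ w
        w -= alpha * q
        # Full reorthogonalization against every previous Lanczos vector.
        for v in basis:
            w -= (v @ w) * v
        alphas.append(alpha)
        beta = np.linalg.norm(w)
        if beta < 1e-12:         # Krylov space became invariant; stop early
            break
        betas.append(beta)
        q_prev, q = q, w / beta
        basis.append(q)
    k = len(alphas)
    off = betas[:k - 1]
    T = np.diag(alphas) + np.diag(off, 1) + np.diag(off, -1)
    # The smallest Ritz value approximates the lowest eigenvalue of A.
    return np.linalg.eigvalsh(T)[0]

# Usage with a hypothetical sparse test matrix:
# H = diag(0, 1, ..., n-1) plus a 0.1 nearest-neighbor coupling.
n = 200
diag = np.arange(n, dtype=float)

def h_matvec(x):
    y = diag * x
    y[1:] += 0.1 * x[:-1]
    y[:-1] += 0.1 * x[1:]
    return y

print(lanczos_lowest(h_matvec, n, num_iter=60))
```

Storing every Lanczos vector costs memory proportional to n times the number of iterations, which is exactly the kind of constraint that tightens on machines with less memory per core; restarted and block variants (LOBPCG, for example) are common alternatives.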

The workshop and program were an important step in the nuclear physics community's efforts to come to grips with evolving hardware that will render existing codes obsolete and require significant changes in computing strategies and algorithm development, as well as in the sociology of high-performance nuclear physics computing. The program gave nuclear physicists an opportunity to hear the perspectives of applied mathematicians and computer scientists on the expected evolution of HPC approaches to our problems over the next five to ten years. Big questions remain: What should we be doing to produce a coherent plan that will keep our computational toolboxes current and allow us to use coming generations of machines? What is the right "model" for such systematic HPC development within a domain science? But one of the program's modest goals, bringing the nuclear physics, applied math, and computer science communities together so that discussions and potential collaborations can continue, was realized.

Figure 1: AMR simulations of white dwarf convection and ignition. From John Bell.

Figure 2: Tom Quinn's depiction of the basic N-body algorithm for modeling gravitational collapse on a GPU machine.
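To give a sense of what an N-body gravity calculation involves, here is a minimal sketch assuming the simplest approach: direct O(N^2) summation of softened (Plummer) gravitational accelerations, advanced with a kick-drift-kick leapfrog integrator. It is not necessarily the algorithm depicted in Figure 2; production GPU codes typically use tree-based methods to avoid the N^2 cost. The function names, code units (G = 1), softening length, and initial conditions are all hypothetical. The all-pairs force sum is the data-parallel kernel that makes this problem a natural fit for GPUs.

```python
import numpy as np

G = 1.0  # gravitational constant in hypothetical code units

def accelerations(pos, mass, softening=1e-2):
    """Direct O(N^2) sum of Plummer-softened gravitational accelerations."""
    # dx[i, j] = r_j - r_i, shape (N, N, 3)
    dx = pos[np.newaxis, :, :] - pos[:, np.newaxis, :]
    r2 = np.sum(dx**2, axis=-1) + softening**2
    inv_r3 = r2 ** -1.5
    np.fill_diagonal(inv_r3, 0.0)  # exclude self-interaction
    # a_i = G * sum_j m_j (r_j - r_i) / (|r_j - r_i|^2 + eps^2)^(3/2)
    return G * np.einsum('ij,ijk->ik', inv_r3 * mass[np.newaxis, :], dx)

def leapfrog_step(pos, vel, mass, dt, softening=1e-2):
    """One kick-drift-kick leapfrog step (recomputes forces; not optimized)."""
    vel = vel + 0.5 * dt * accelerations(pos, mass, softening)
    pos = pos + dt * vel
    vel = vel + 0.5 * dt * accelerations(pos, mass, softening)
    return pos, vel

# Usage: hypothetical cold collapse of particles initially at rest in a cube.
rng = np.random.default_rng(1)
n = 256
pos = rng.uniform(-1.0, 1.0, size=(n, 3))
vel = np.zeros((n, 3))
mass = np.full(n, 1.0 / n)
for _ in range(100):
    pos, vel = leapfrog_step(pos, vel, mass, dt=1e-3)
```

Because the pairwise forces are equal and opposite, total momentum is conserved to round-off, which is a cheap sanity check for implementations like this one.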